Hello World

My name is Rui Sun (Rui is pronounced like "Ray"). I am a CS PhD student at UCLA NLP, advised by Prof. Kai-Wei Chang. I received my master's degree from Columbia University (Research Specialization, Advisor: Prof. Shih-Fu Chang).

Prior to that, I received my bachelor's degree from Xidian University, where I worked with Prof. Nannan Wang.

My research interests are Vision-Language Multimodal Learning, Natural Language Processing, and Computer Vision.

I am fortunate to work with brilliant mentors and advisors. You can find my work experience and their names in the Experience section.

Multimodal Learning is a broad topic. If you would like to know more about what I am doing and what I have done, please jump to the Publications or Experience section.

[GitHub]

[LinkedIn]

[Semantic Scholar]

[Twitter]

[Email] (Please contact me via my UCLA email address; I seldom check my previous Columbia email)

If you would like to know more about me, you can view the source code of this webpage. (Right-click the page and select View Page Source)

Last Update: 09/19/2025

Recent News (08/2025, 09/2025):

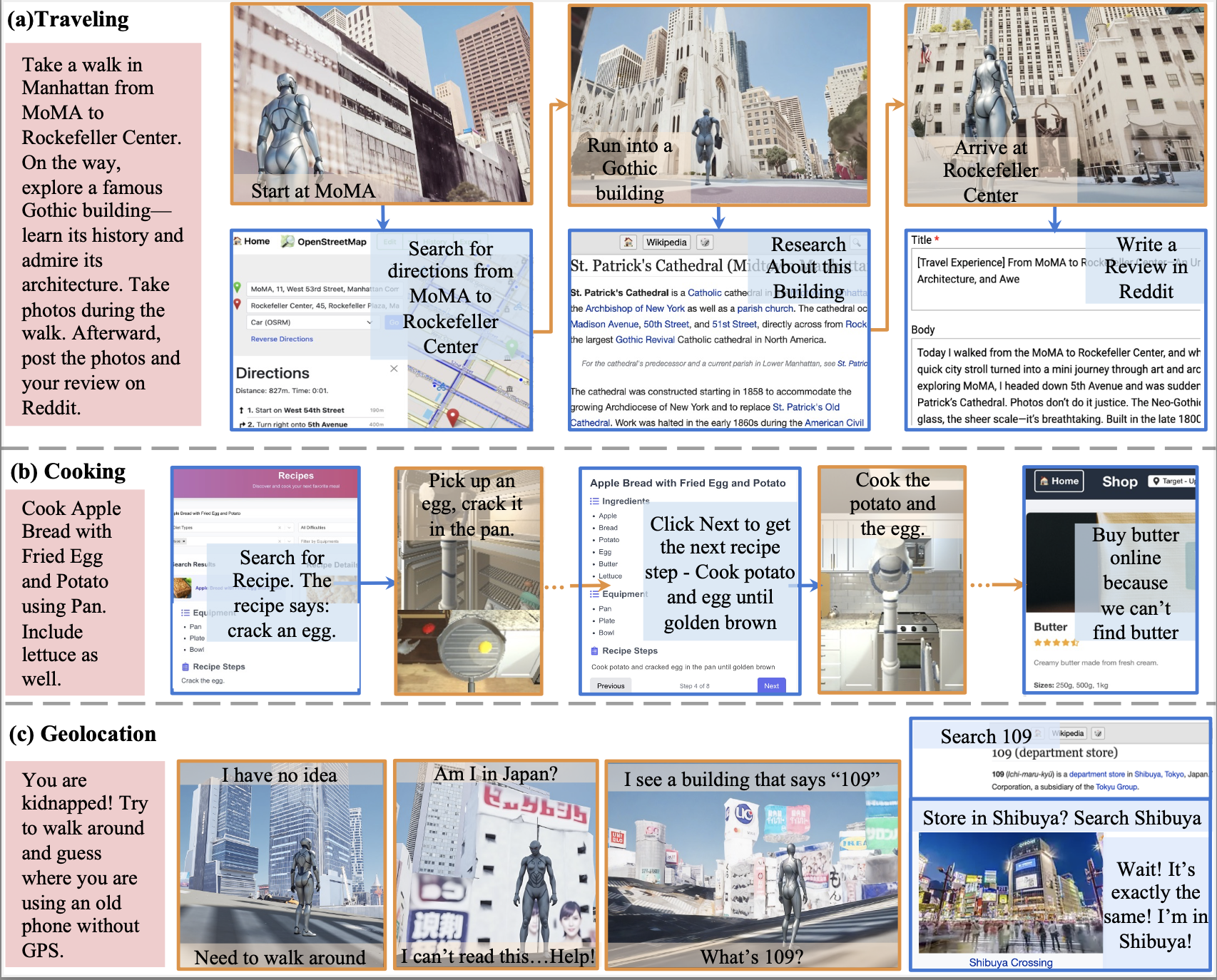

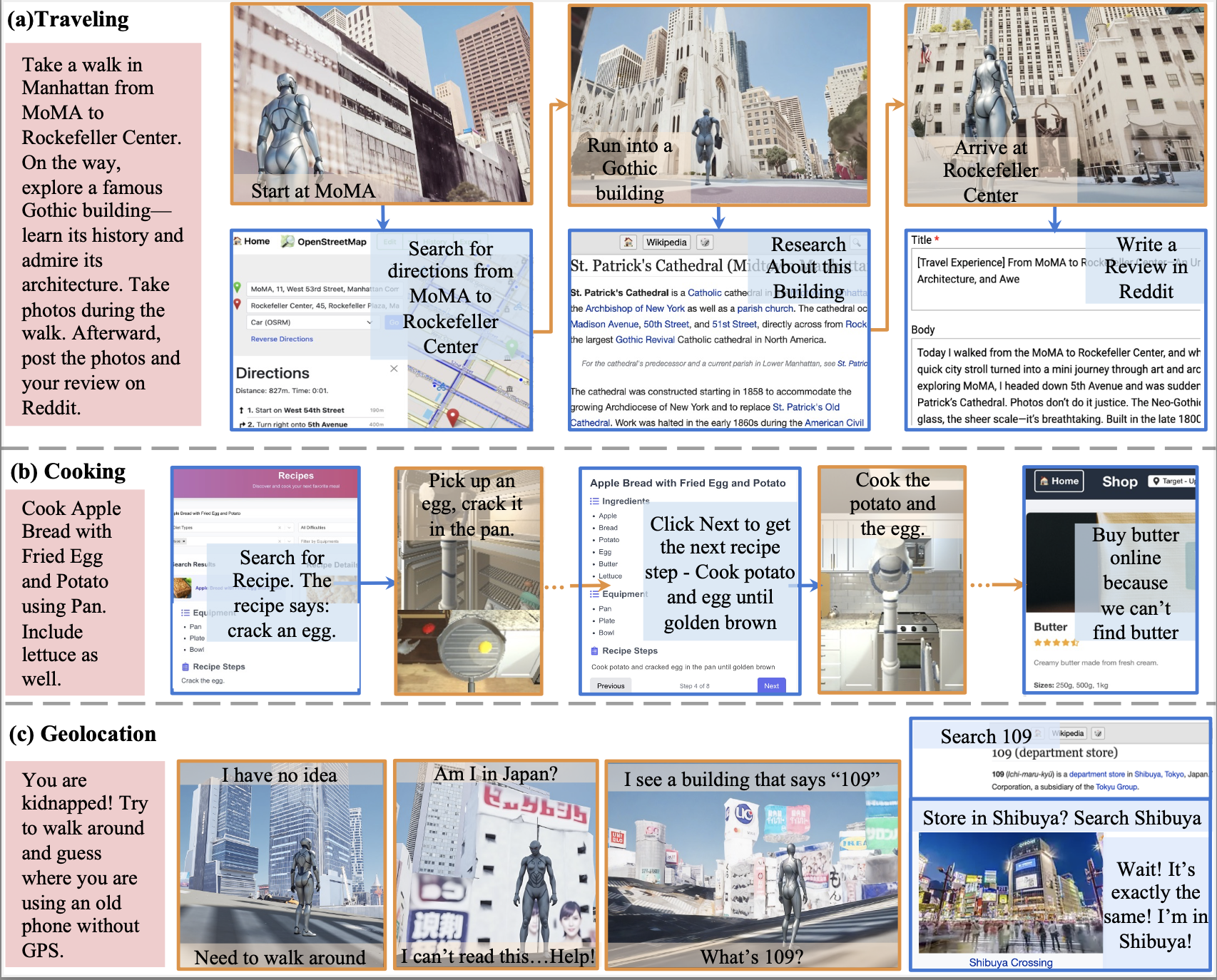

One paper on Embodied Web Agents was accepted to the NeurIPS 2025 Datasets and Benchmarks Track as a Spotlight paper. This paper concretizes my current research roadmap: a Multimodal Language Agent should be able to tackle tasks in both the digital world and the real world, moving freely between these environments to achieve unified automation. Feel free to have a look at our work!

One proposal on Multimodal Language Agents was funded by Amazon Science Hub (advised by Prof. Kai-Wei Chang).

Publications and Preprints

* and ** denote equal contribution (co-first and co-second authorship, respectively)

EMBODIED WEB AGENTS: Bridging Physical-Digital Realms for Integrated Agent Intelligence

NeurIPS 2025 Datasets and Benchmarks Track (Spotlight, Top 3%)

Yining Hong*, Rui Sun*, Bingxuan Li**, Xingcheng Yao**, Maxine Wu**, Alexander Chien**, Da Yin, Ying Nian Wu, Zhecan James Wang, Kai-Wei Chang

[Paper]

[Project Page] [Code] [Twitter]

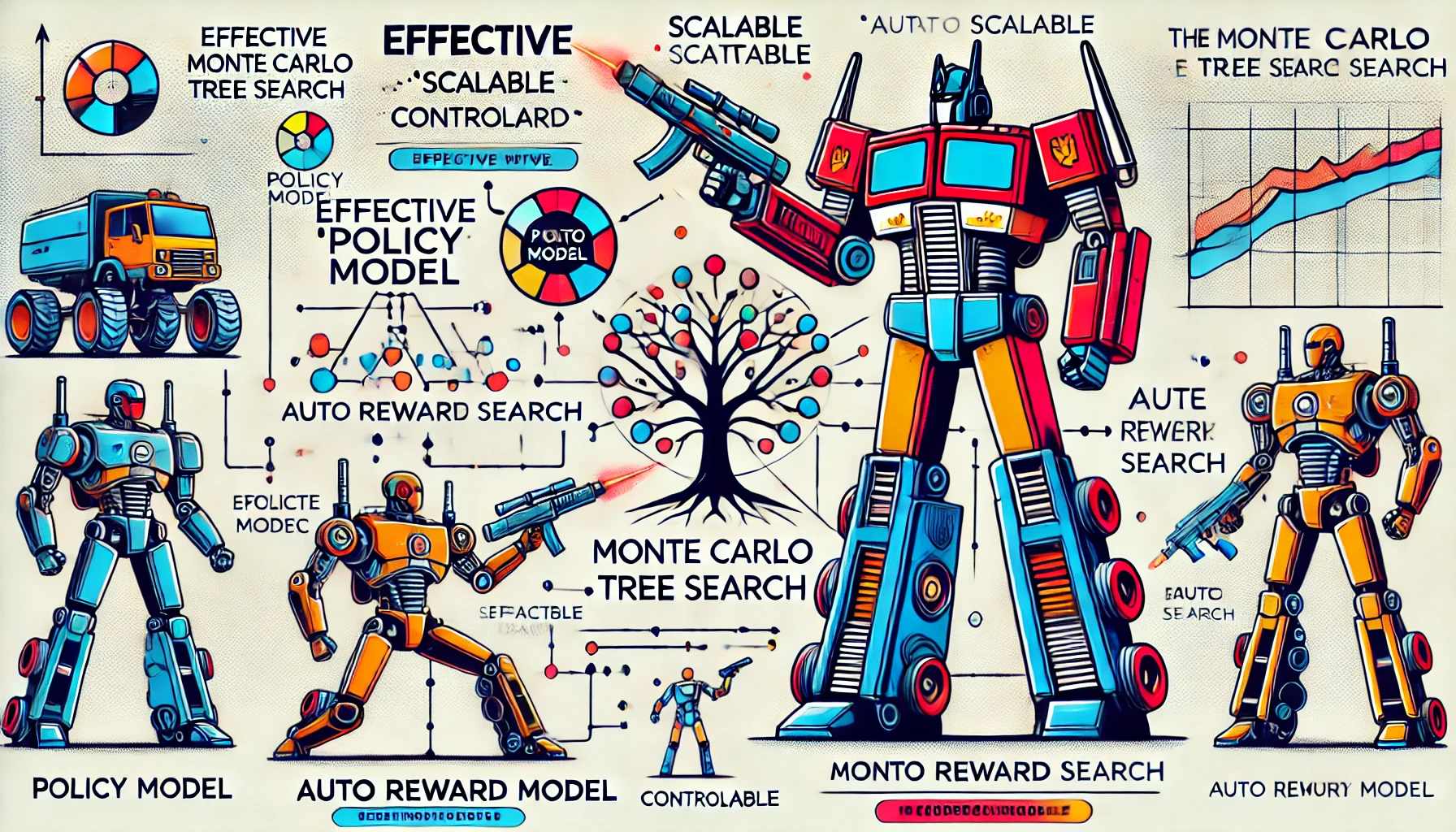

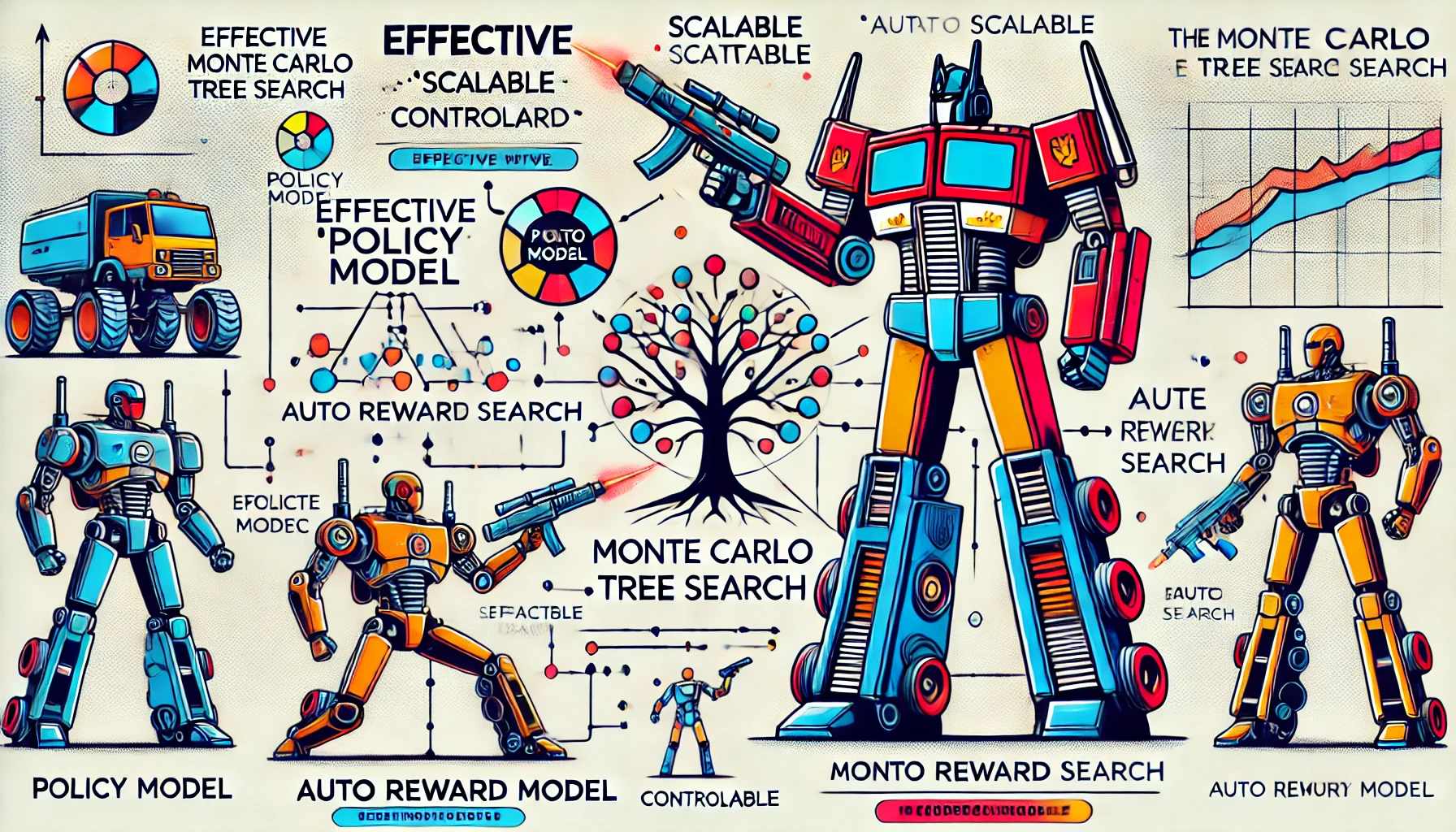

ARMAP: Scaling Autonomous Agents via Automatic Reward Modeling And Planning

ICLR 2025

Zhenfang Chen*, Delin Chen*, Rui Sun*, Wenjun Liu*, Chuang Gan

[Paper] [Paper List of Inference/Test Time Scaling/Computing]

[Project Page] [Code] [Twitter]

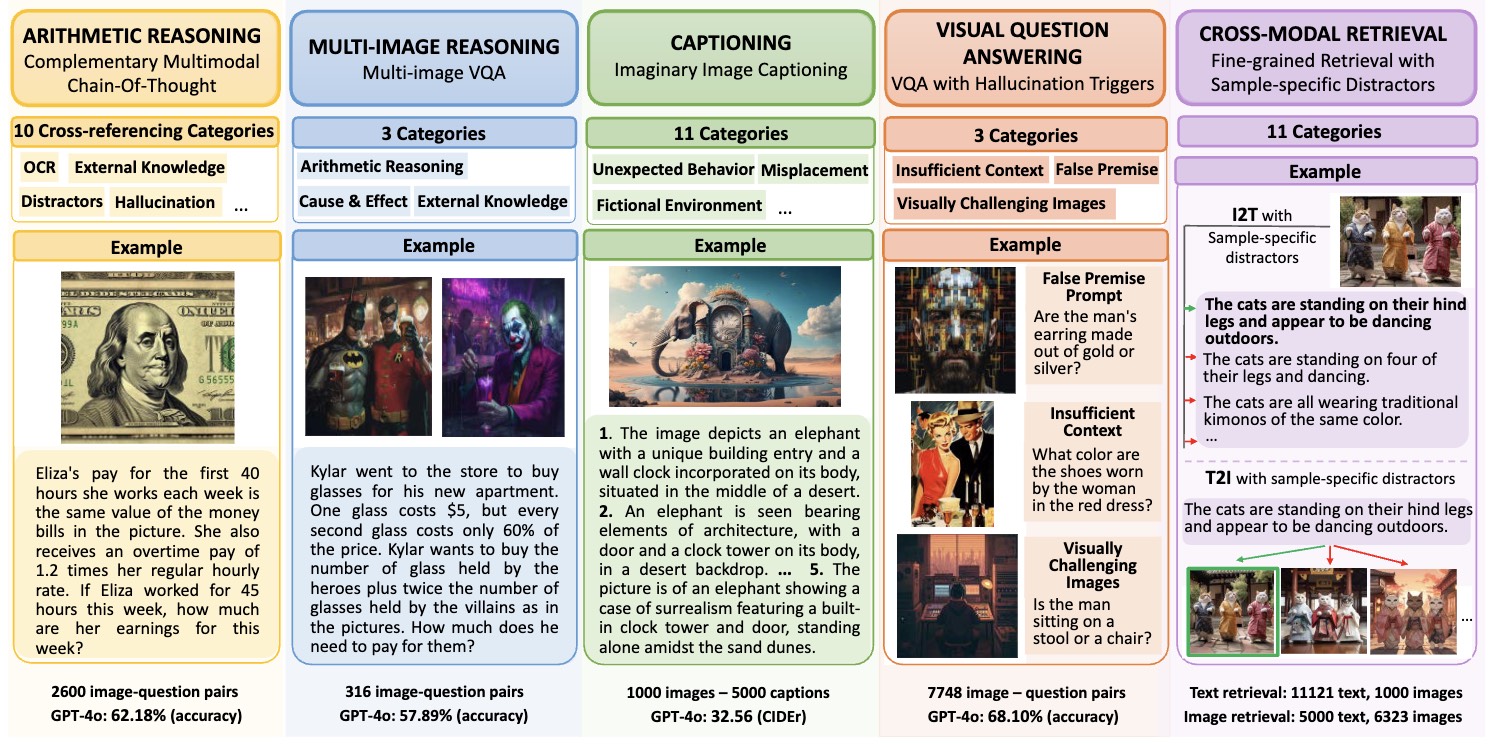

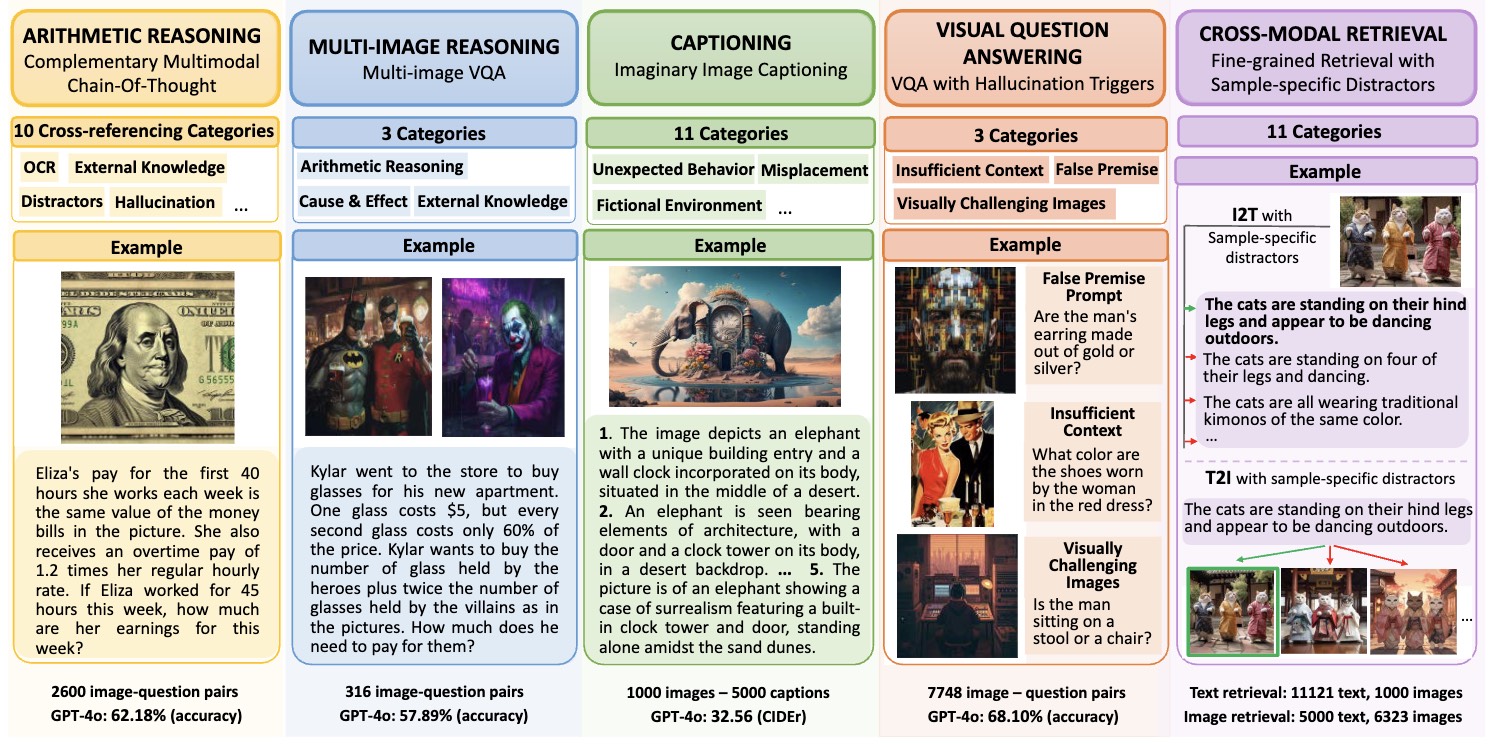

JourneyBench: A Challenging One-Stop Vision-Language Understanding Benchmark of Generated Images

NeurIPS 2024 Datasets and Benchmarks Track

Zhecan Wang*, Junzhang Liu*, Chia-Wei Tang, Hani Alomari, Anushka Sivakumar, Rui Sun, Wenhao Li, Md. Atabuzzaman, Hammad Ayyubi, Haoxuan You, Alvi Md Ishmam, Kai-Wei Chang, Shih-Fu Chang, Chris Thomas

[Paper] [Project Page] [Code] [量子位] [Twitter]

Detecting Multimodal Situations with Insufficient Context and Abstaining from Baseless Predictions

ACM MM 2024 (long)

Junzhang Liu*, Zhecan Wang*, Hammad Ayyubi*, Haoxuan You, Chris Thomas, Rui Sun, Shih-Fu Chang, Kai-Wei Chang

[Paper][Code]

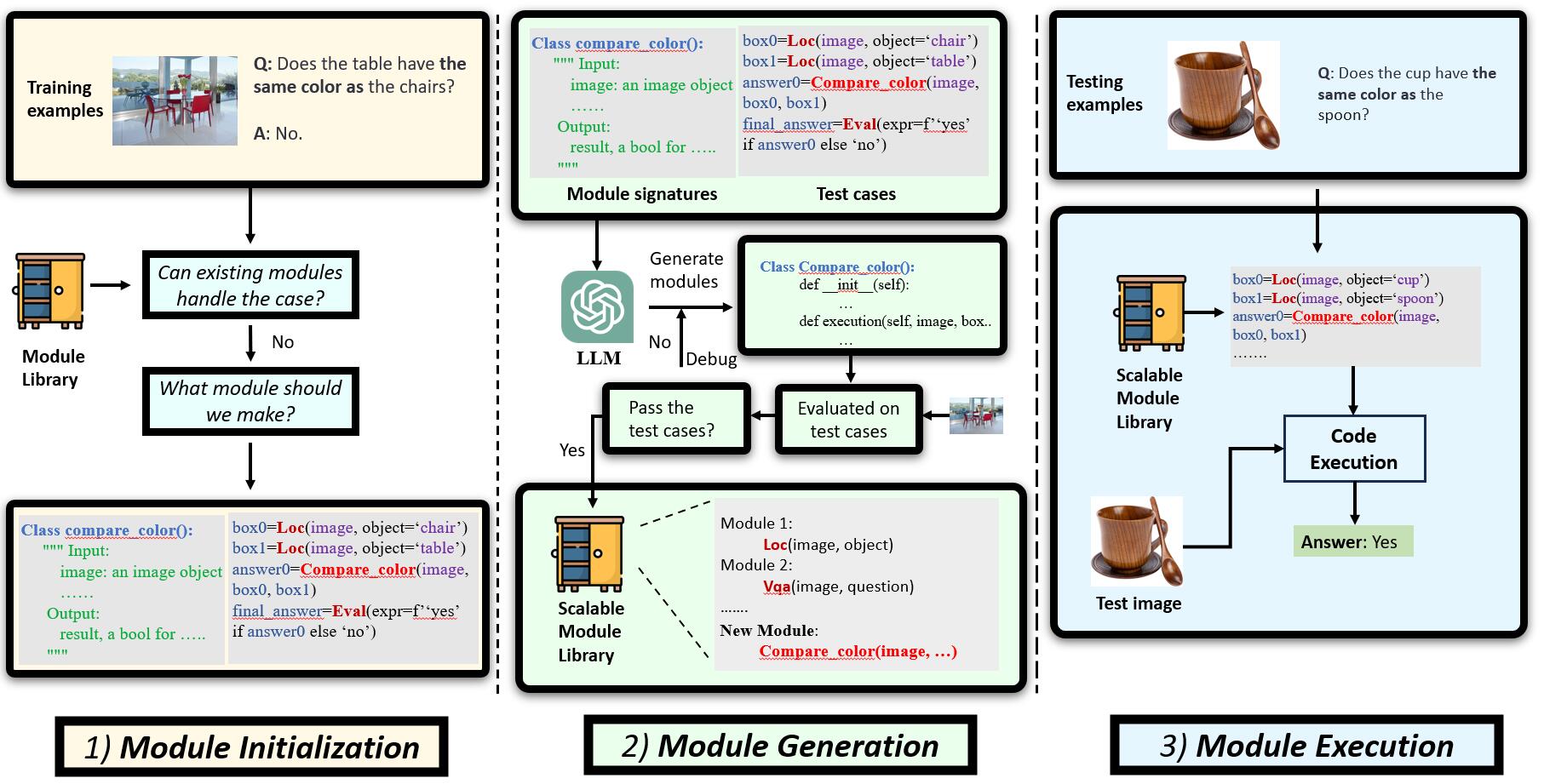

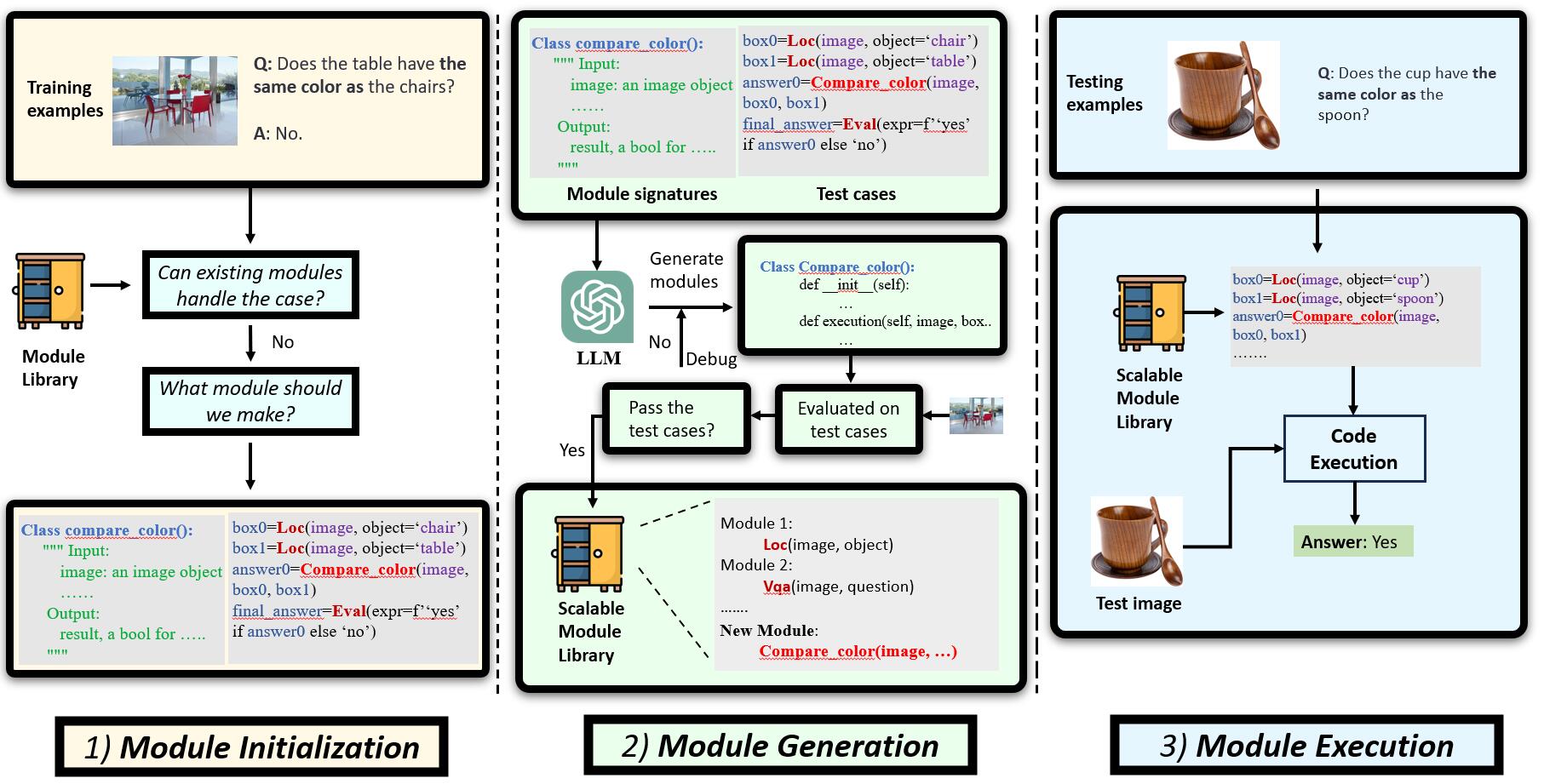

GENOME: Generative Neuro-Symbolic Visual Reasoning by Growing and Reusing Modules

ICLR 2024

Zhenfang Chen*, Rui Sun*, Wenjun Liu*, Yining Hong, Chuang Gan

[Paper] [Project Page] [Video Demo] [Code] [Twitter]

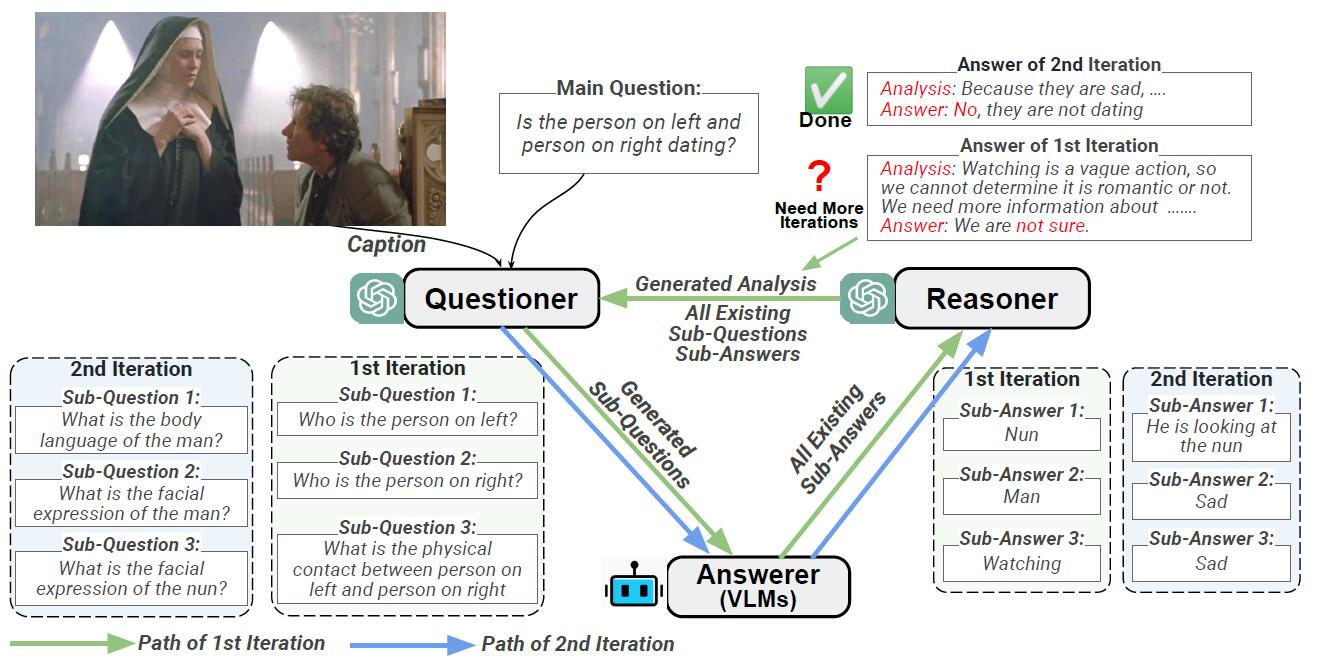

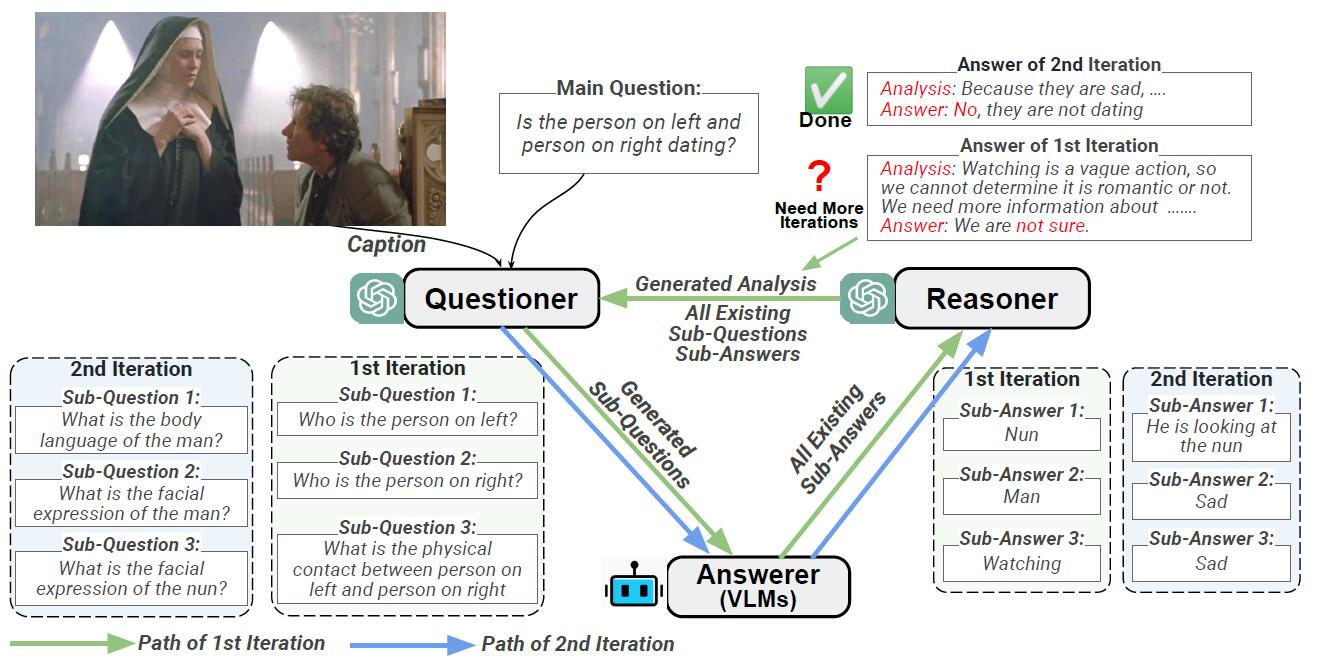

IdealGPT: Iteratively Decomposing Vision and Language Reasoning via Large Language Models

Findings of EMNLP 2023 (long)

Haoxuan You*, Rui Sun*, Zhecan Wang*, Long Chen, Gengyu Wang, Hammad A. Ayyubi, Kai-Wei Chang, Shih-Fu Chang

[Paper][Code]

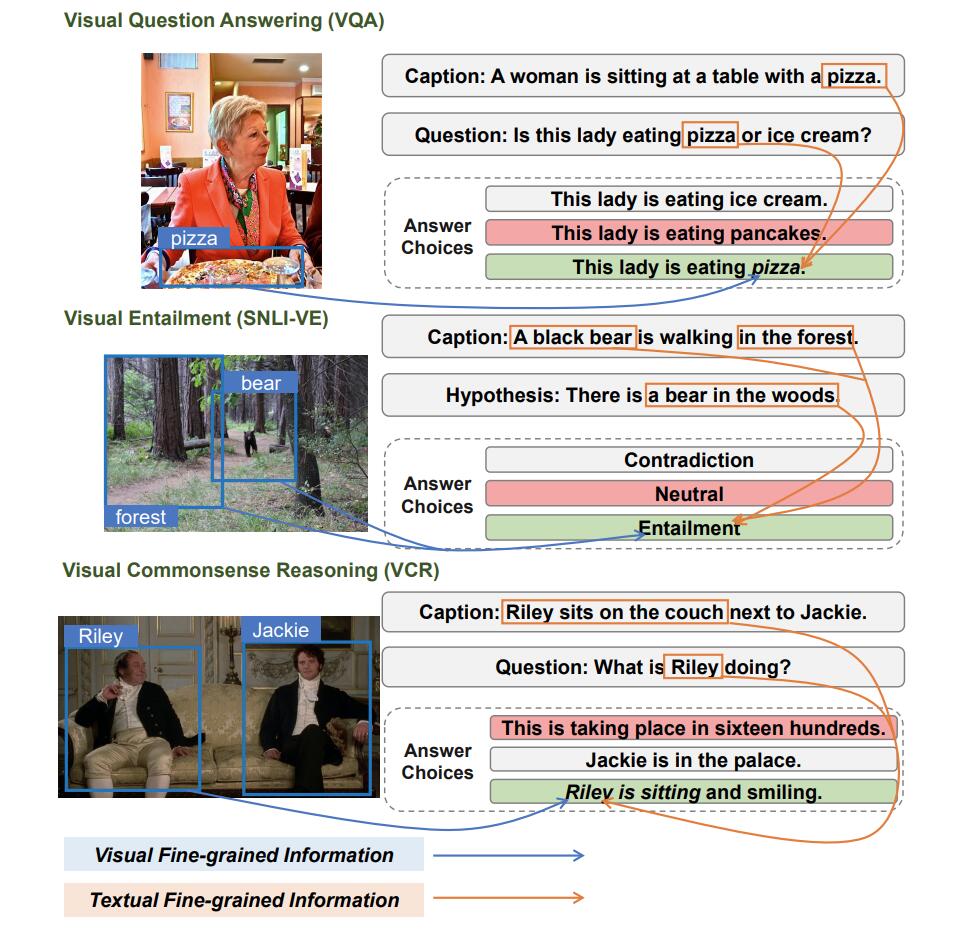

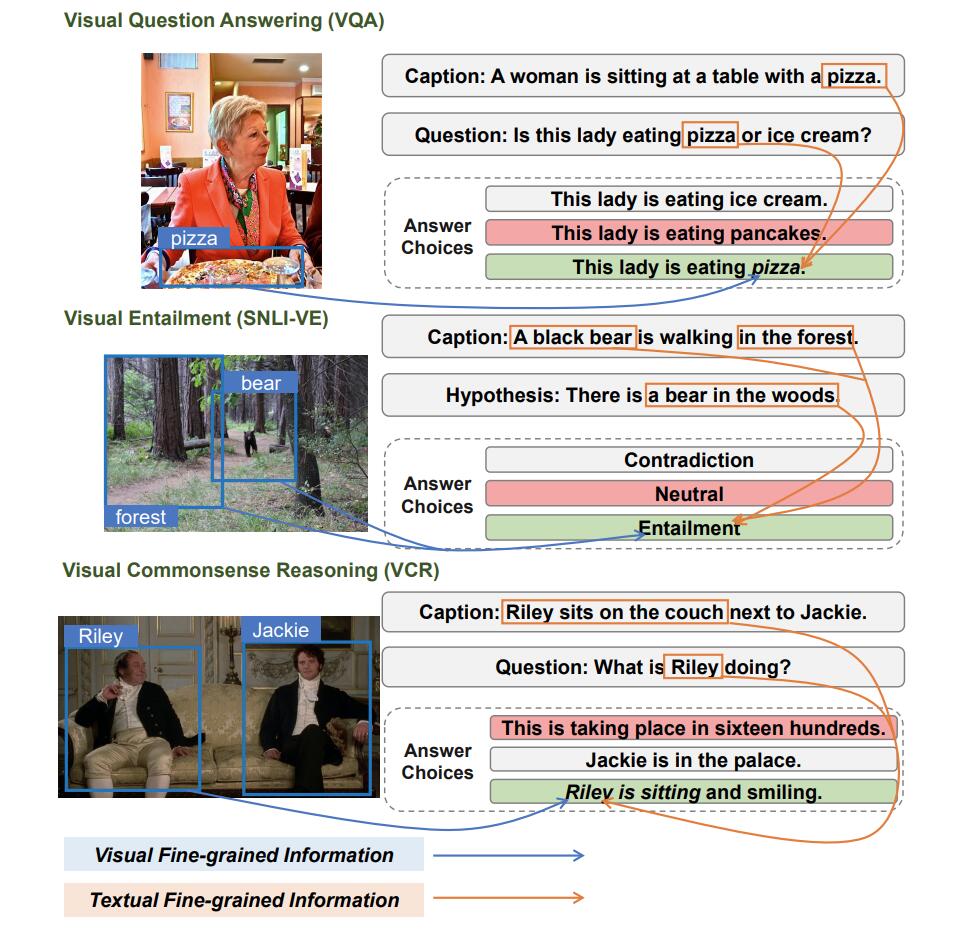

UniFine: A Unified and Fine-grained Approach for Zero-shot Vision-Language Understanding

Findings of ACL 2023 (long)

Rui Sun*, Zhecan Wang*, Haoxuan You*, Noel Codella, Kai-Wei Chang, Shih-Fu Chang

[Paper][Code]

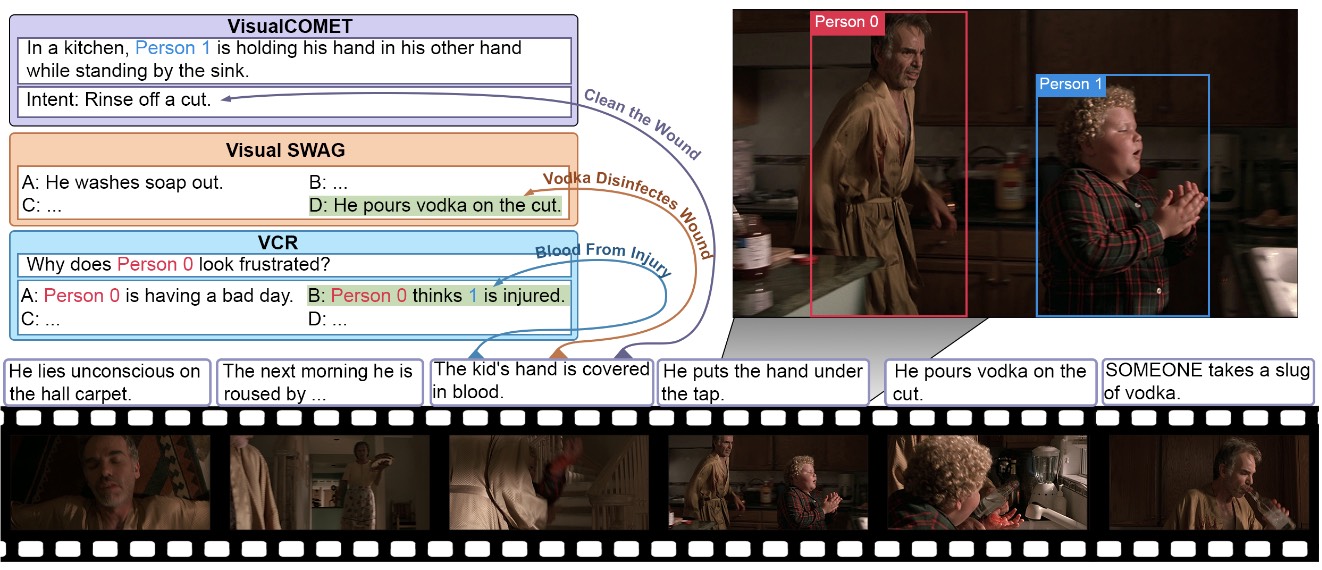

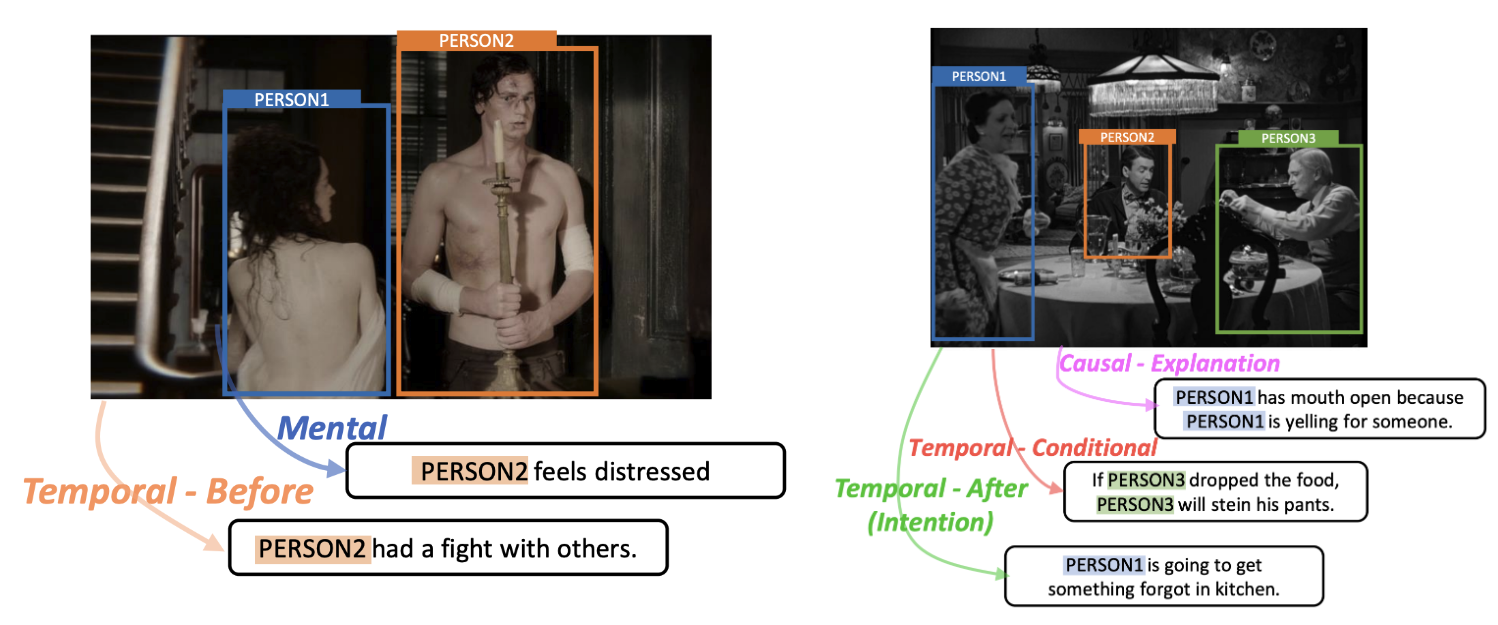

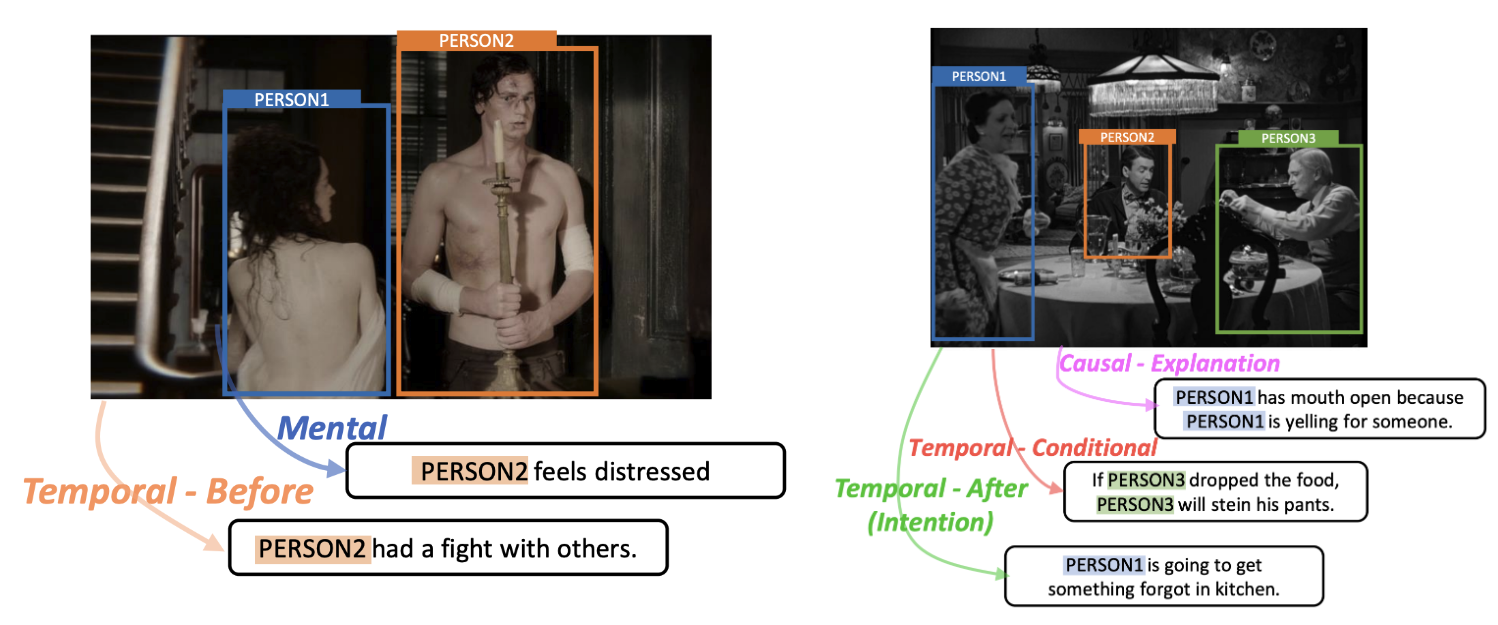

Find Someone Who: Visual Commonsense Understanding in Human-Centric Grounding

Findings of EMNLP 2022 (long)

Haoxuan You, Rui Sun, Zhecan Wang, Kai-Wei Chang, Shih-Fu Chang

[Paper][Code]

Technical Reports

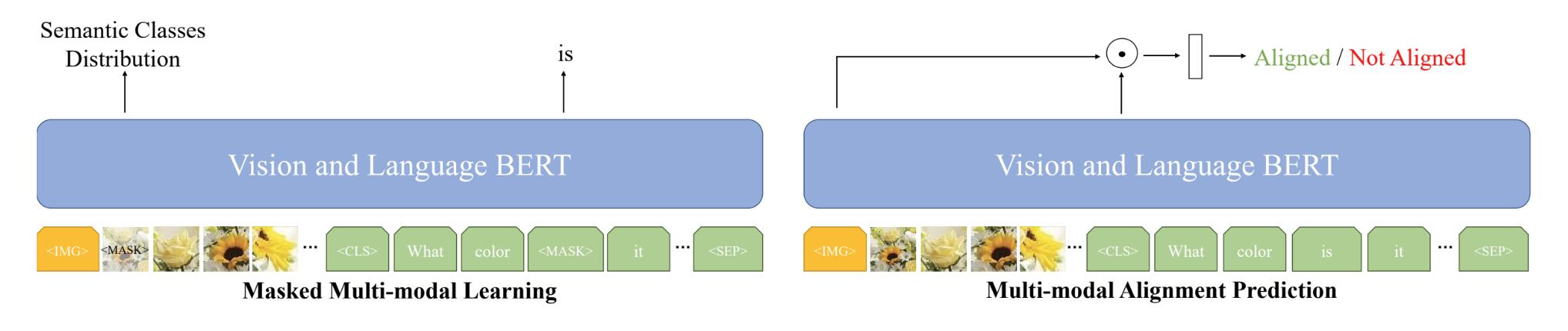

An empirical study of QA-oriented pretraining

Rui Sun

[GitHub Repo]

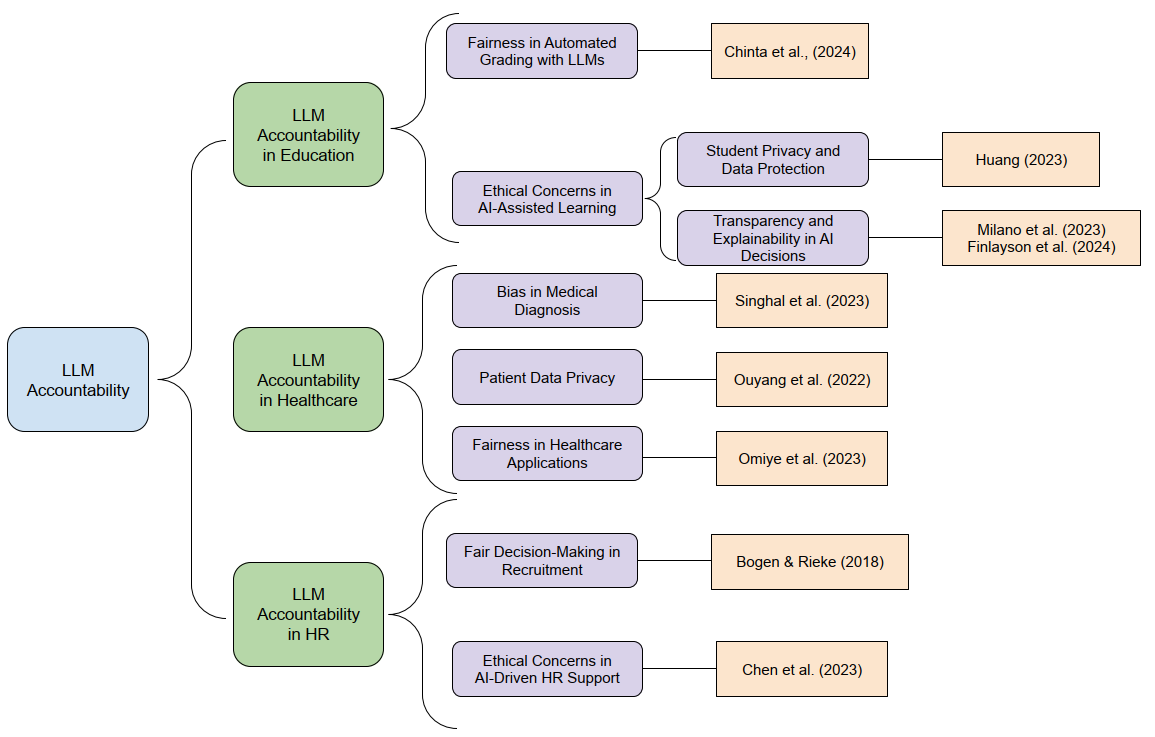

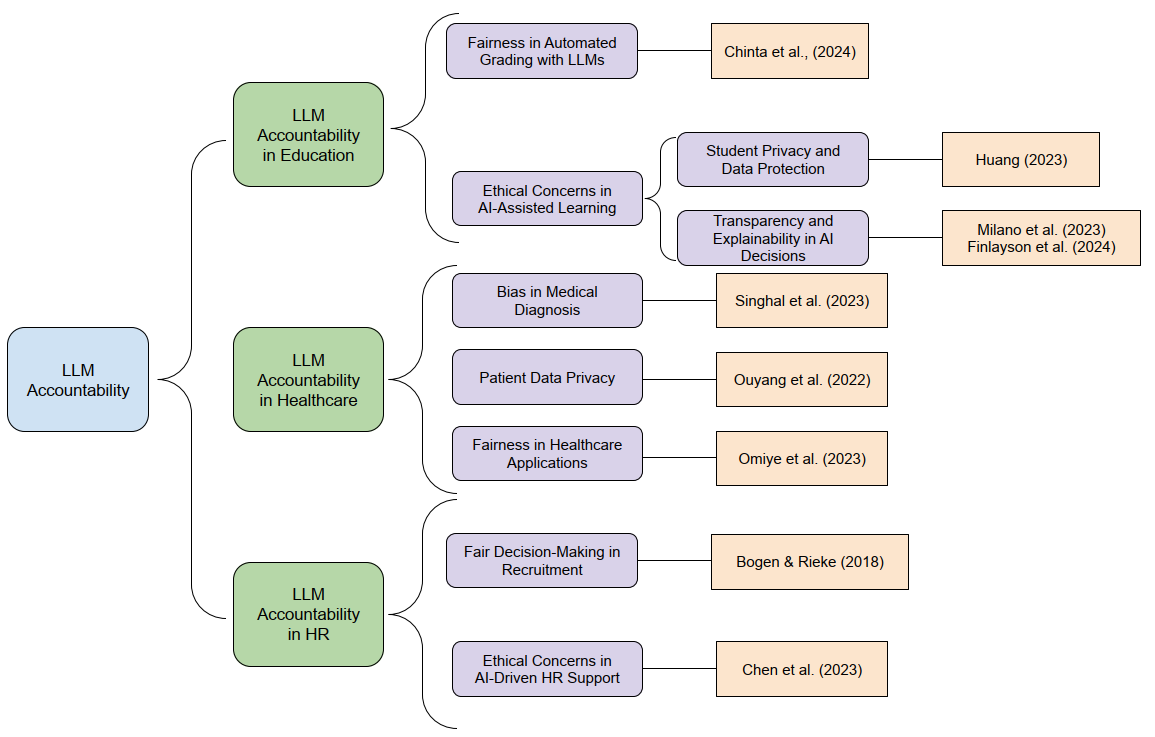

A Survey of LLM Accountability in Education, Healthcare, and Human Resources

Abdolrahim Arjomand, Oscar Granadino Horvath, Rui Sun†, Weihan Qu († denotes corresponding author)

[Report] [Slides]

Experience

UCLA NLP, Research Assistant

Advisor: Prof. Kai-Wei Chang, Sep 2024 -- Present

Multimodal Language Agent (Computer Use Agent and Embodied Agent)

- Embodied Web Agent (Mentor: Dr. Yining Hong) (NeurIPS 2025 Datasets and Benchmarks Track (Spotlight))

Microsoft Research, Redmond (Deep Learning Group), Research Intern

Advisor & Mentor: Dr. Hao Cheng, Dr. Baolin Peng, Dr. Reuben Tan, Dr. Jianfeng Gao, Prof. Kai-Wei Chang, Jun 2025 -- Present

Computer Use Agent

MIT-IBM Watson AI Lab, Research Assistant

Advisor & Mentor: Dr. Zhenfang Chen, Prof. Chuang Gan, Jun 2023 -- Oct 2024

Inference Time Scaling for Language Agent with Monte Carlo Tree Search (ICLR 2025)

Neuro-Symbolic Visual Reasoning (ICLR 2024)

Digital Video and Multimedia (DVMM) Lab, Columbia University, Research Assistant

Advisor & Mentor: Dr. Haoxuan You, Dr. Zhecan Wang, Prof. Long Chen, Prof. Shih-Fu Chang, Prof. Kai-Wei Chang, Oct 2021 -- Jun 2023, Oct 2023 -- Jun 2024

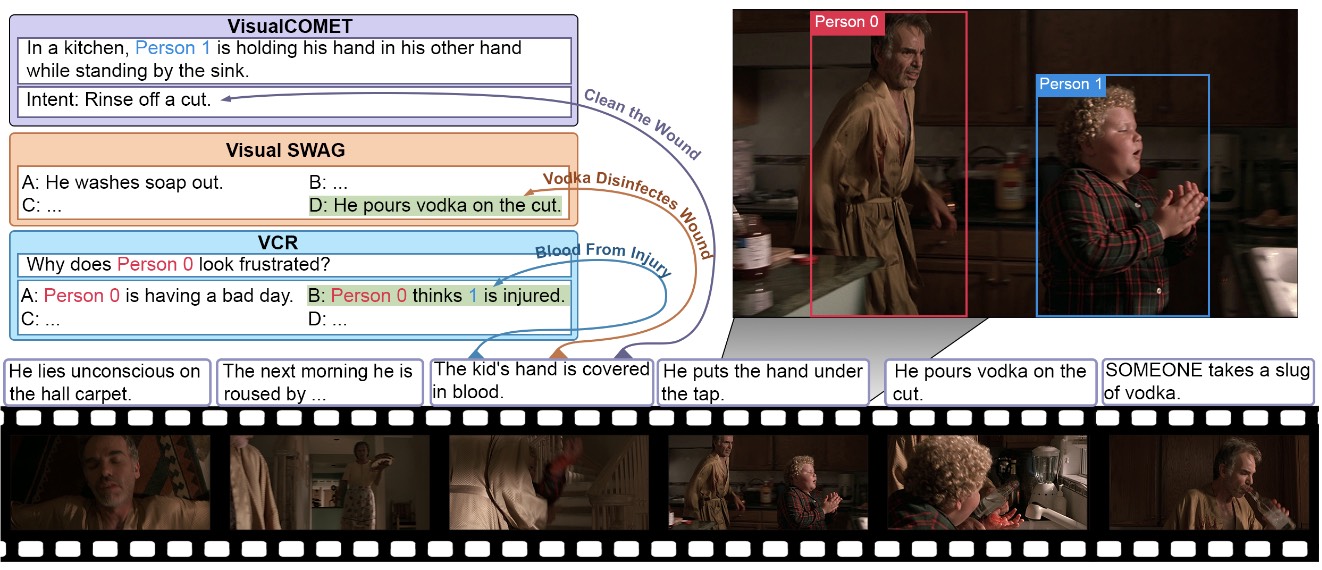

Vision and Language Understanding Evaluation (NeurIPS 2024 Datasets and Benchmarks Track)

Vision Language Models Hallucination Mitigation (ACM MM 2024)

Large Language Model Aided Visual Reasoning (Findings of EMNLP 2023)

Zero-shot Vision-Language Understanding (Findings of ACL 2023)

Human-centric Visual Commonsense Grounding Dataset (Findings of EMNLP 2022)

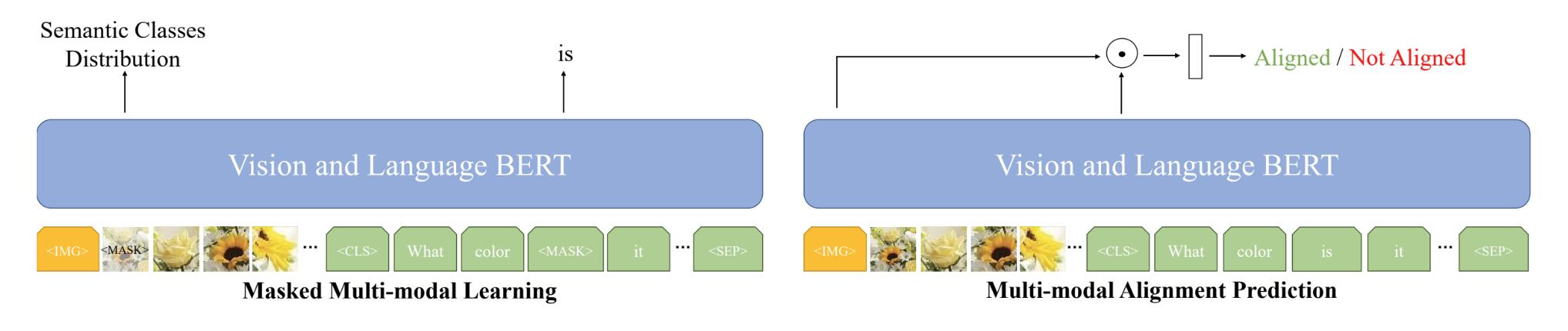

Task-oriented Pretraining (Second-stage Pretraining) of Vision-Language Pretrained Models (Technical Report)

Columbia NLP, Research Assistant

Advisor: Prof. Zhou Yu, Sep 2023 -- Mar 2024

Fine-grained and Explainable Visual Categorization (More details can be found in this paper in ACM MM)

Rehab Engineering Alliance & Center Transforming Low Vision, NYU Langone Health, Research Assistant

Advisor: Prof. JohnRoss Rizzo, Prof. Zhou Yu, Jun 2023 -- Aug 2023

AI for Social Good: Deploying Vision-Language & Large Language Models in wearable devices for people who are blind or have low vision (More details can be found on this page)

Intelligent Information Processing (IIP) Lab, Xidian University, Research Assistant

Advisor & Mentor: Prof. Jingwei Xin, Prof. Nannan Wang, Sep 2020 -- Jul 2021

Human Face Frontalization and Hallucination (More details can be found in this paper in IEEE TCSVT)

Honors & Awards

Research fund from Amazon Science Hub (advised by Prof. Kai-Wei Chang), 2025

Research fund from Google Research Scholar Program (led by Prof. Chris Thomas), 2025

Interdisciplinary Contest in Modeling (ICM), Meritorious Winner, 2018

Service

Reviewer

NLP: EMNLP, EACL, NAACL, ACL Rolling Review (ARR), ACL

AI: AAAI

Multimedia: ACM MM

ML: ICLR, NeurIPS Datasets and Benchmarks Track, NeurIPS Position Paper Track

Journal: JAIR

Workshop: Scaling Environments for Agents (SEA), NeurIPS 2025 Workshop

Trust Before Use: Building Foundation Models that You Can Trust (T2FM), ICCV 2025 Workshop

Teaching Assistant at UCLA

CS M146 Introduction to Machine Learning, working with Prof. Kai-Wei Chang, Fall 2025

Teaching Assistant at Columbia

EECS 6699 Mathematics of Deep Learning, worked with Prof. Predrag Jelenkovic, Spring 2024

COMS 4995 Deep Learning for Computer Vision, worked with Prof. Peter Belhumeur, Fall 2023

ELEN 4815 Random Signals and Noise, worked with Prof. Irving Kalet, Spring 2023

COMS 4995 Neural Networks & Deep Learning, worked with Prof. Richard Zemel, Fall 2022

COMS 4732 Computer Vision II, worked with Prof. Carl Vondrick, Spring 2022

Misc

Students who have worked or are working with me (I feel fortunate to work with these talented students)

Maxine Wu (co-mentored with Dr. Yining Hong, UCLA Undergraduate Student)

Alexander Chien (co-mentored with Dr. Yining Hong, UCLA Undergraduate Student)

Photos

Los Angeles, CA, 2024 Fall, Royce Hall

Los Angeles, CA, 2024 Fall, Getty Center

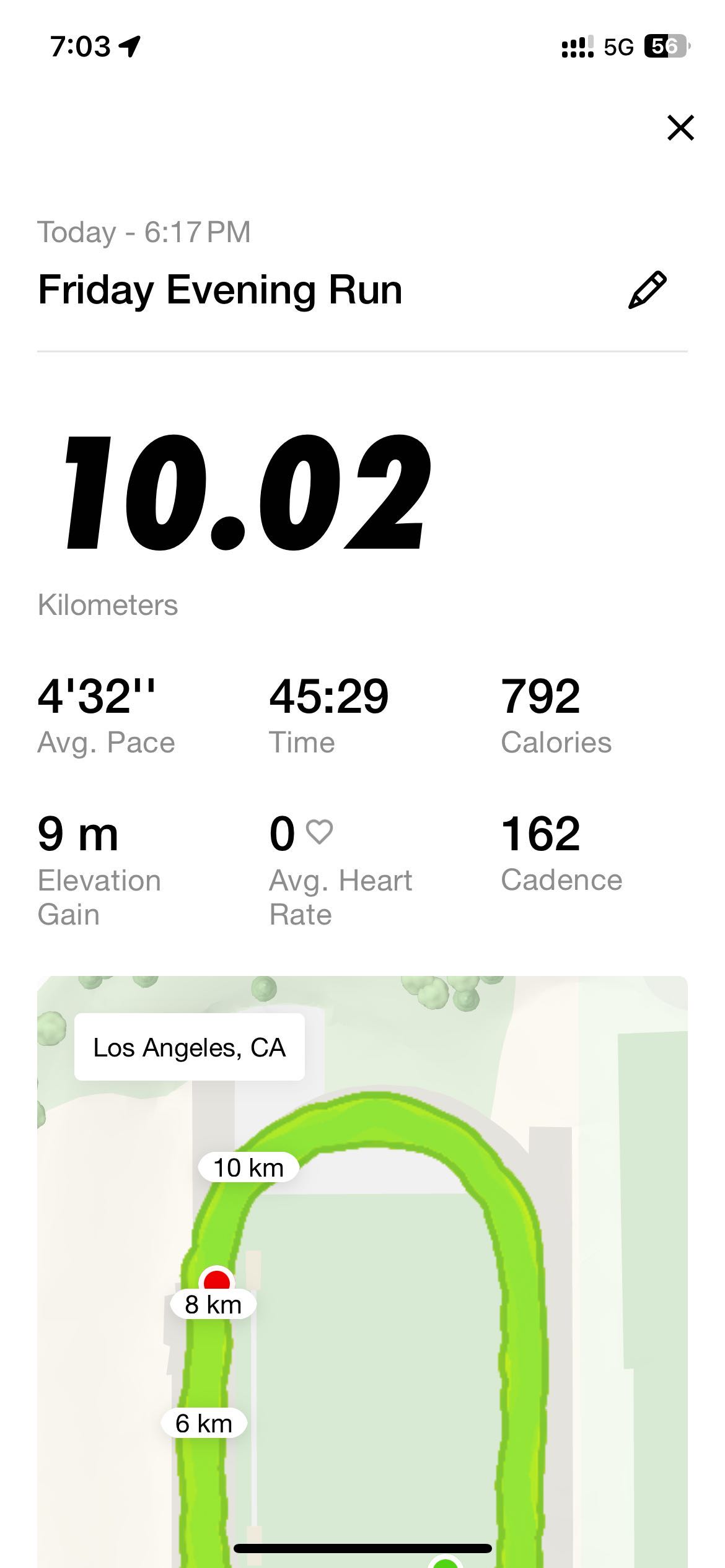

Drake Stadium, CA, 2024 Fall, My Best 10 km Record

Old Photos